Syntactic Analysis

A poor mans analysis

Table of Contents

This lecture is about Syntactic Analysis, a static analysis which is only analyses programs at the structure level. We'll see that it allows us to build some analysis relatively easy, but fails at others.

What is Syntactic Analysis? §1

As mentioned in the introduction, a syntactic analysis analyses the structure of a program, instead of looking at the meaning of it. For example, in syntactic analysis 2 + 1 is different from 1 + 2. This makes it ideal for capturing the intent of the developer, but not great for detecting complex logical bugs.

Many static analyses

in the wild, often called linters, are simply syntactic analyses, matching patterns in the code. They do this for two reasons. The first is that it is easier than to develop and maintain and the second is that gives better feedback to the developer, as you can highlight the pattern in the source.

The Levels of Syntax §1.1

The levels of syntactic matches can range from matching the bits, to matching the final compiled bytecode.

When matching syntax it is important to match at the right level of abstraction. Initial levels contain more of the developers intention, but later levels gives us more structure, making the analysis more robust and easier to write.

We can start by analysing the text directly, this allows us to extract information about the white-space of the program. However, if we don't think that the white-space is important for the analysis, we can look at the tokens instead. The tokens is a list of elements matched by the parser to group patterns like integer literals 1232 and 232, so that they appear the same to the parser.

The parser converts the list of tokens into a Concrete Syntax Tree. A CST is the collection of all the tokens of the program, but placed in a tree like structure. For example 10 + (5 + 3) is turned into a tree like (parenthesis (+ 10 (parenthesis (+ 5 3)))) instead. This deals with precedence and other headaches we don't want to think about. E.g ., is 10 + 3 * 5 equal to 10 + (3 * 5) or (10 + 3) * 5?

In contrast to CST, Abstract Syntax Tree does not have to follow directly from the structure of the text. This allows the AST to remove seemingly unimportant information from the tree. E.g ., parenthesis: (( 10 )) and ( 10 ) are both stored as 10.

The compiler then coverts the AST into a some kind of Intermediate Representation, this can be the LLVM-IR, ClangIR, or RTL. At this level, a lot of work has been done by the compiler to disambiguate references (making all variables fully qualified) and optimize some cases. x + 2 + 4 might now be mypackage.Class.x + 6, which makes some kinds of analysis easier.

Finally the IR is complied to Bytecode, now the style from the original language is gone, and only pure functionality is left.

While every step down the latter makes it easier to write analyses, we lose a little of the context and intention of the developer.

Preventing AND and OR Confusion

Say we want to warn users about using || and && in code because the precedence is often not known by developers. A good level to attack this is on the CST level, as we can differentiate between a && b || c and (a && b) || c. The developer would get a warning for the first example but not for the second.

An analysis at every level?

Come up with information which is best retrieved at every level.

Hint (Hover to see)

bits: The encoding of the file.

text: The number of characters or unknown tokens.

tokens: The number of tokens.

CST: Syntax level confusions, like the example above.

AST: Dangerous code constructions, like

query("SELECT FROM db WHERE name=" + user_input)IR: Uses of deprecated functions.

bytecode: Detection of unoptimized code.

Goal of Syntactic Analysis §1.2

Since a syntactic analysis can never be sound (accepting only good programs), we often have to aim for complete (rejecting no good program). This is in line with a study by Christakis (2016), that shows that most developers would rather have an unsound analysis than too many warnings. Here are two quotes from the findings:

Program analysis design should aim for a false-positive rate no higher than 15–20%.

— Christakis and Bird

When forced to choose between more bugs or fewer false positives, [developers] typically choose the latter.

— Christakis and Bird

What can we Pattern Match on? §2

Since most things we do in program analysis is undecidable, it is nice to have a basic understanding of what kinds of patterns we can pattern match on.

The set of all words or programs which match a pattern is in language theory called a language.

A Language — Formally Speaking §2.1

In Computer Science, we refer to a language as a set of a sequence of symbols from the alphabet. If a string is in language we refer to it as a word.

Language

A language over the alphabet , is a subset of all words from the alphabet .

The goal is automatically to figure out if a given word is in the language or not. We differentiate between Recognizers and Deciders. A decider, is a machine (or Automaton), which can determine if a string is in a language or not. A recognizer does the same thing, but is allowed to never give an answer. Essentially run forever.

The Halting problem is Recognizable

The halting problem is undecidable, but it is recognizable. We can run the program and if it terminates, we know it terminates. If it does not terminate ..., well we have to wait to see if it does.

We can describe every programming analysis problem as sub-language problem: For example, the language of all terminating java programs can be described like so:

It is also because of language theory, that we use sound and complete for static analyses as we do. In fact, we can see a static analysis as a decider for a sublanguage.

Sound:

Complete:

Production Rules and Grammars §2.2

In practice we define languages using Grammars. A grammar is a collection of production rules of the form , where are sequences of symbols (represented by , , and ) and nonterminals (represented by , , and ). They are called non-terminals as we will keep applying the production rules, rewriting the string that look like into until there no non-terminals left. Any string in a language is called a word, and the language is a programming language we call it a program.

We can now see if a word is in a language by generating all possible words in the language, and then checking if is in that set. This algorithm, clearly can run forever, so more efficient solutions are needed.

Grammar

A grammar is a quadruple , where is a set of non-terminals (, , ), is the alphabet, is a set of production rules, and is the start symbol.

The language of the grammar is:

Where means applying the rules in until no non-terminal is left.

This is best illustrated by an example.

Generate

Consider the grammar , where is defined like this:

It can generate the following word in the language

Some languages actually work like this, think about LaTeX and the C macro system.

What Types of Languages Exist? §2.3

Since syntactic analysis is simply matching strings, which languages can we efficiently match? This is where the Chomsky hierarchy comes in.

The Chomsky hierarchy, with production rules.

It separates languages into four categories of decreasing expressive power. On the top of the hierarchy, we type 3 languages. The type 3 language category is the most restrictive, and contain all languages definable by regular expressions. In practice, this only allows for repeats of small languages and cannot match nested parenthesis. Figuring out if a string is in a type 3 language is decidable.

In a type 2 language we can have words defined by nesting. This is very useful if you want nested parenthesis. This language is the basis of the syntax of most programming languages (except C: typedef I'm looking at you!). Type 2 languages can be recognized using a push down automaton.

In a type 1 language, we can define our language recursively, but we are also allowed to give context to the recursion. For example checking a program to see if a variable is in scope, is context sensitive because it depends on the declarations and how nested we are in the curly parenthesis:

int hello(int x) {

// Context { x , y }

int y;

{

int z;

// Context { x , y, z} is z in scope? Yes

}

// Context { x , y} is z in scope? No

}Finally, we have type 0 languages, is a set of words which can be recognized by a Turing machine. One example is all programs that terminate.

How do we Match Patterns in Practice? §3

In practice we use four kinds of matching systems when writing syntactic analyses. Regular expressions, Grammars and Parsers, Folds and Traversals and bespoke matching.

Regular: Regular Expressions §3.1

To match regular languages we can use regular expressions, they are extremely useful, common, and very fast (They can be recognized in ):

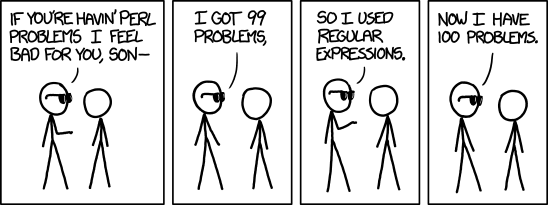

XKCD, source: https://xkcd.com/1171

The grammar for defining regular expressions.

The programs from this language can be executed to form sets of accepted words. We can easily define the meaning of the language using denotational semantics. Denotational semantics just means, giving an mathematical object meaning by mapping it to one we already know about.

where is the smallest solution to the equation.

The denotational semantics for regular expressions

In practice, this language is extended quite heavily to be able to easily write better matches. You can explore different flavors of the language and how it matches on https://regex101.com/. Here | is often used instead of +, and + is used to indicate one or more matches: .

Getting Started §3.1.1

First, we need to get a hold of the information about the method, we need to analyse.

Match the method names.

You might already have recognized that the method names form jpamb.cases.Simple.assertPositive(I)V a little language: jpamb.cases.Simple . assertPositive : (I) V where each part represent important information. The class, the method, the arguments, and the (return type).

Use https://regex101.com/ to try to match on the method. Here are some tricks:

remember to pick the flavor that matches your implementation language (in the menu).

use the cases from

https://github.com/kalhauge/jpamb/blob/main/stats/cases.txtas test cases (you do that by inserting it in the big box).you can use the quick references to help you write the expression.

remember that !'.' means everything, so use !'\.' to match !'.'.

use match groups to extract each part in your analysis.

Then, we'll need to actually inspect the code. First we need to figure out the correct file. We do that by replacing !'.' with !'/' or !'\' depending on the platform. We can also use regular expressions to match patterns which is not nested. Luckily, cases in our benchmark suite are never nested, so we can use regular expression to quite effectively find them.

Extract the code

Insert the code of jpamb.cases.Simple into the Test String

field, and try to extract the content of the methods.

Hint (Hover to see)

r"public\W+static\W+(?P<retype>void)\W+(?P<mname>\w*)()\W*{(?P<code>[^}]*)}"Finally, take a step back and think about what kinds of patterns which would be hard to match using this technique.

Extract the code

Think about if your solution still works:

on other files,

if you change the indentation or insert newlines,

change the methods from static to non-static, and

without the case statement.

Context-Free: Grammars and Parsers §3.2

At this point it should be clear that using regular expressions for matching a Context Free language is a bad idea. The problem of course happens when we want to match items nested within other items.

Instead we want to use a Parser. A parser is a structured program which goal is to take in a stream of tokens and turn them into a CST.

Parsers are often generated automatically from grammars, and the grammars are often written in what is known as Backus-Naur form. In programming language theory, this is the primary way to define the syntax of a programming language.

It is created by a set of productions (like with our grammar), but we allow each production to have multiple matches, separated by |: and B := a. The BNF is often extended with syntax for many and some . Furthermore, we often uses first-match semantics where we match on the first production we can. This makes the language deterministically context-free, which changes the complexity of parsing a program from to .

There are many categories of parsers, and to learn more about them you should take the 02247 Compilers course.

Using Tree-Sitter §3.2.1

In practice, we can take a look at the grammars written for the Tree-Sitter generator.

Lambda Calculus

To get an idea of how it works, we can encode the lambda calculus, as a tree sitter grammar.

We can see it contains the same elements as the grammar above but written in Javascript:

{

rules: {

source_file: $ => $._exp,

_exp: $ => choice($.abs, $.app, $.var, $._parens),

abs: $ => prec.right(seq('λ', $.var, '.', $._exp)),

app: $ => prec.left(seq($._exp, $._exp)),

var: $ => /[a-zA-Z]+/,

_parens: $ => choice("(", $._exp, ")"),

}

}In the above text, prec is used to define the precedence, meaning if the syntax is read left to right or right to left. E.g ., is a b c the same as (a b) c or a (b c).

The cool thing about Tree-Sitter is that there are defined grammars for most language you would like to analyse, and has bindings to many languages including Java, Python, Go and Rust. In this example we are going to use Python (but try your own).

Get syntaxer to work

Try to get syntaxer to work. At this point you should be able to run:

$ uv run solutions/syntaxer.py 'jpamb.cases.Simple.assertPositive:(I)V'The result of parsing a program is an parse tree, or a CST. You can play around with seeing different parse trees in the tree-sitter playground.

At this point we would like to use this technology to match patterns in the code, to which we have two options. One solution is to extend the grammar to explicitly add rules that detect common errors. E.g ., we can write a parse rule that matches x / 0, and assigns it to a div_error group. However this is cumbersome, and not easily done. Our second option is to match patterns using Tree Sitters support for Queries

, a built-in language for matching elements in the syntax tree. These queries are essentially regular expressions, but are matched at every level of the syntax tree.

Play around with Queries Tree-sitters playground

Go to https://tree-sitter.github.io/tree-sitter/7-playground.html and insert the code from jpamb.cases.Simple

Enable Query and try the following query:

(method_declaration name:

((identifier) @method-name (#eq? @method-name "assertBoolean"))

body: (_) @body

) @methodYou can write your own queries using the Query Syntax.

Try to extend syntaxer

Extend syntaxer to also warn about diving by 0 errors, you can do this by slightly increasing the probability that the code contains an devide by 0 error if you see / in the method, and slightly decrease it if you don't.

Hint (Hover to see)

Start with the query:

(binary_expression

operator: "/"

) @exprand debug with

public class Simple {

public static int divideByZero() {

return 1 / 0;

}

}Context Sensitive: Matching Patterns in Trees §3.3

One big limitation of Tree-sitter queries is that they do not currently support nested queries, and we need those if we want to match patterns with context.

Context is important

The context of the match is important, in the following code, we want to see if assertFalse contains a divide by 0 exception. But because our match matches every thing without context, we match the 1/0 in divideByZero by mistake.

public class Simple {

public static int assertFalse() {

assert False;

}

public static int divideByZero() {

return 1 / 0;

}

}In this case you have to write your own.

Folds: General Matching of Patterns in Trees §3.3.1

We call recursively matching a pattern on a tree structure a fold. Actually, there is whole discipline in math devoted to this problem called Abstract Algebra. Here they have spend a lot of effort categorizing all kinds of folds (and unfolds). Here we refer to patterns as Initial Algebras, and we can see them as the nodes of the tree, where each edge is replaced by a hole. E.g ., the initial algebra of a list of x's is . The most common is called a catamorphism which says I can reduce any structure X, with initial algebra F_X, given a function that given the algebra with have computed the value for all of your children, what is the value I should replace you with in your parent.

Tree Traversals §3.3.2

In practice, is general recursion often expensive in terms of speed and memory, luckily all recursions can be made into iterative traversals by mimicking the stack yourself. When we traverse we differentiate between pre and post orders. I.e., do we match for patterns on the way down or on the way up.

Pre- (red), In- (green), and Post-order (blue) traversal of tree. source

In our case, we can use the built-in Tree-sitter cursor to traverse the tree.

Pre or Post-order?

Consider the following traversal of the tree, which of the yield points yield a postorder and which yield a preorder?

def traverse(item: ts.Tree) -> Iterator[ts.Node]:

cursor = item.walk()

godeeper = True

while True:

node = cursor.node

if godeeper:

yield node # Pre or Post

if not cursor.goto_first_child():

godeeper = False

elif cursor.goto_next_sibling():

godeeper = True

elif cursor.goto_parent():

yield node # Pre or Post

godeeper = False

else:

breakRecursive Enumerable: Bespoke §3.4

To match languages beyond Context-Insensitive languages, there exist some tools, but it becomes harder and harder to match full languages efficiently. In this case you have to write bespoke analyses in your favorite language.

Almost all syntactic static analyses or linters

falls into this category. Here are some examples:

There is still interesting work in building an easy and intuitive system for pattern match normal programming constructs with recent work like Rafnsson (2020) and Tomasdottir (2020).

Another approach is to use LLMs. An LLM due to its fixed input window, can only recognize regular languages, however in practice, they tend to do well on simple analysis tasks because of its ability to recognize common patterns.

Until next time!

Until next time, write the best analysis you can and upload the results to Autolab, and ponder the following:

What are the limitations of a syntactic analysis?

When is syntactic analysis a good tool?

Trick Question: Is Java a regular language, context free or recursive enumerable?

Bibliography

Christakis, Maria; Bird, Christian (2016).

What developers want and need from program analysis: an empirical study.

doi:10.1145/2970276.2970347linkMeijer, Erik; Fokkinga, Maarten; Paterson, Ross (1991).

Functional programming with bananas, lenses, envelopes and barbed wire.

doi:10.1007/3540543961_7linkRafnsson, Willard; Giustolisi, Rosario; Kragerup, Mark; Høyrup, Mathias (2020).

Fixing Vulnerabilities Automatically with Linters.

doi:10.1007/978-3-030-65745-1_13linkTomasdottir, Kristin Fjola; Aniche, Mauricio; van Deursen, Arie (2020).

The Adoption of JavaScript Linters in Practice: A Case Study on ESLint.

doi:10.1109/TSE.2018.2871058linkVan Wyk, Eric R .; Schwerdfeger, August C . (2007).

Context-aware scanning for parsing extensible languages.

doi:10.1145/1289971.1289983linkWagner, Tim Allen (1997).

Practical algorithms for incremental software development environments.

linkWagner, Tim A .; Graham, Susan L . (1998).

Efficient and flexible incremental parsing.

doi:10.1145/293677.293678link